Micro Intelligent Computing Center Solution for Universities

Background analysis

The parameter counts of large AI models are growing exponentially, from “large models” with hundreds of billions of parameters to “super-large models” with trillions. This trend reflects how quickly AI technology is advancing and how rapidly models are growing in scale and complexity.

As models grow, the computing resources and storage capacity they require grow with them, placing higher demands on hardware and infrastructure. Meeting these challenges calls for an efficient, reliable, and scalable storage solution that is disaggregated from compute.
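To make the storage pressure concrete, the sketch below estimates the raw size of model state at the two scales mentioned above. It assumes the commonly cited accounting for mixed-precision training with the Adam optimizer (16 bytes per parameter: fp16 weights and gradients plus fp32 master weights and two fp32 Adam moments) and, for comparison, fp16 weights alone at 2 bytes per parameter. Real checkpoint sizes vary with precision, optimizer, and sharding, so treat these as rough orders of magnitude.

```python
# Rough size of model state, in terabytes (1 TB = 10**12 bytes).
# Assumption: mixed-precision Adam training keeps 16 bytes per parameter
# (fp16 weights 2 + fp16 gradients 2 + fp32 master weights 4
#  + fp32 Adam momentum 4 + fp32 Adam variance 4).
FP16_WEIGHTS_BYTES = 2
ADAM_MIXED_PRECISION_BYTES = 2 + 2 + 4 + 4 + 4  # = 16


def state_size_tb(num_params: float, bytes_per_param: int) -> float:
    """Return the raw size of num_params parameters at bytes_per_param, in TB."""
    return num_params * bytes_per_param / 1e12


if __name__ == "__main__":
    for label, params in [("100 billion parameters", 1e11), ("1 trillion parameters", 1e12)]:
        weights = state_size_tb(params, FP16_WEIGHTS_BYTES)
        full = state_size_tb(params, ADAM_MIXED_PRECISION_BYTES)
        print(f"{label}: fp16 weights {weights:.1f} TB, full training state {full:.1f} TB")
```

Under these assumptions a trillion-parameter model carries 2 TB of fp16 weights and 16 TB of full training state, before counting multiple saved checkpoints or the training data itself.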

Deployment options

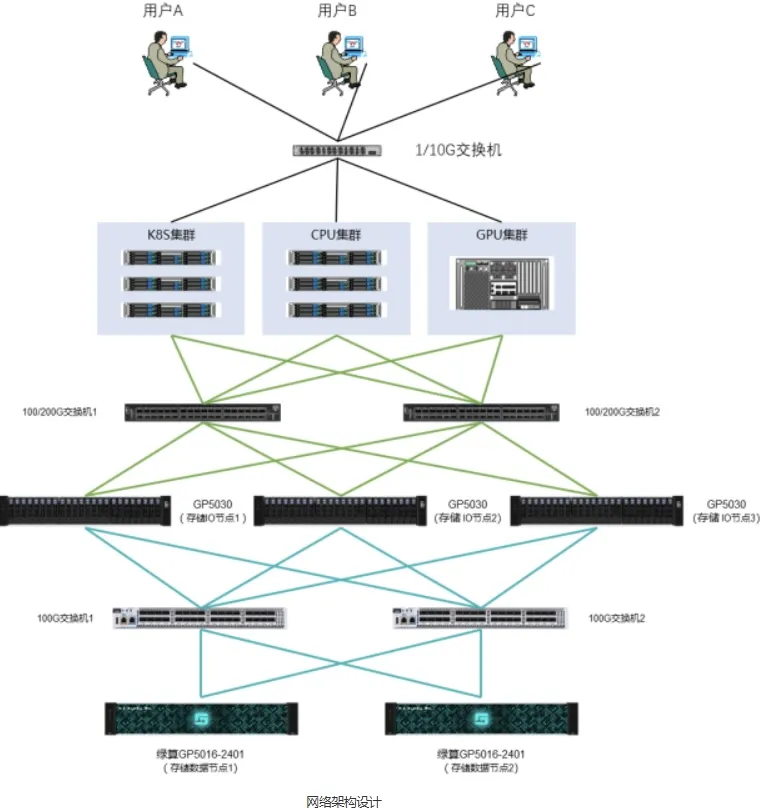

Storage internal network: the data network between storage I/O nodes and storage data nodes. Each storage I/O node provides two 100 Gb/s RoCE v2 ports and each storage data node provides six, all connected to the storage internal-network switch.

Storage external network: the data network between the storage I/O nodes and the nodes of the K8s cluster, CPU cluster, and GPU cluster. Each of these nodes provides two 100 Gb/s RoCE v2 ports connected to the storage external-network switch.

Computing network: the high-speed communication network between GPU nodes, which shares the storage network. A standalone InfiniBand network is optional.

Management network: used to manage and monitor every device, sharing the storage external network. A standalone gigabit network is optional.
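The per-node port counts above are enough to size the two storage switches and estimate line-rate bandwidth. The sketch below is hypothetical: the node counts are example values, it assumes each storage I/O node's two external ports are in addition to its two internal ports, and it reports raw line rate at 100 Gb/s per port, not achievable throughput after protocol overhead.

```python
from dataclasses import dataclass

PORT_GBPS = 100           # each RoCE v2 port runs at 100 Gb/s
IO_INTERNAL_PORTS = 2     # per storage I/O node, storage internal network
DATA_INTERNAL_PORTS = 6   # per storage data node, storage internal network
EXTERNAL_PORTS = 2        # per I/O node and per K8s/CPU/GPU node, storage external network


@dataclass
class StorageFabric:
    io_nodes: int
    data_nodes: int
    client_nodes: int  # K8s, CPU and GPU nodes attached to the storage external network

    def internal_switch_ports(self) -> int:
        return IO_INTERNAL_PORTS * self.io_nodes + DATA_INTERNAL_PORTS * self.data_nodes

    def external_switch_ports(self) -> int:
        return EXTERNAL_PORTS * (self.io_nodes + self.client_nodes)

    def external_oversubscription(self) -> float:
        """Client-side external bandwidth divided by I/O-node external bandwidth."""
        client_gbps = self.client_nodes * EXTERNAL_PORTS * PORT_GBPS
        io_gbps = self.io_nodes * EXTERNAL_PORTS * PORT_GBPS
        return client_gbps / io_gbps


def line_rate_gbytes(ports: int) -> float:
    """Raw line rate of `ports` 100 Gb/s links in GB/s (8 bits per byte)."""
    return ports * PORT_GBPS / 8


if __name__ == "__main__":
    fabric = StorageFabric(io_nodes=4, data_nodes=6, client_nodes=16)  # hypothetical sizes
    print("internal switch ports:", fabric.internal_switch_ports())    # 44
    print("external switch ports:", fabric.external_switch_ports())    # 40
    print("oversubscription:", fabric.external_oversubscription())     # 4.0
    print("data node line rate (GB/s):", line_rate_gbytes(DATA_INTERNAL_PORTS))  # 75.0
```

An oversubscription ratio above 1 only means the clients together could request more than the I/O nodes' external links carry at once; whether that matters depends on how many clients read concurrently.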

Solution Value

●Reduce data preparation time before AI training

LUISUAN High Performance Storage delivers high throughput and high IOPS when ingesting massive volumes of multimodal data from many sources, greatly increasing data write speed. Data collection time for AI training drops by about 80%, from roughly 10 days to 2 days, and in some cases to just a few hours.
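The 80% figure follows directly from the before and after durations, and the storage-bound part of ingest time is roughly data volume divided by sustained write throughput. The sketch below shows both calculations; the 1 PB dataset and the throughput values are hypothetical examples, not measured LUISUAN figures.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100


def ingest_hours(dataset_bytes: float, write_gbytes_per_s: float) -> float:
    """Hours to write dataset_bytes at a sustained throughput in GB/s (10**9 bytes/s)."""
    seconds = dataset_bytes / (write_gbytes_per_s * 1e9)
    return seconds / 3600


if __name__ == "__main__":
    print(f"{percent_reduction(10, 2):.0f}% shorter")  # 80% shorter (10 days -> 2 days)
    one_pb = 1e15  # hypothetical dataset size in bytes
    for gbps in (2, 20):  # hypothetical sustained write throughputs
        print(f"1 PB at {gbps} GB/s: {ingest_hours(one_pb, gbps):.1f} h")
```

Real collection time also depends on the data sources and the network, so this captures only the portion bounded by storage write speed.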

●Faster loading of AI training datasets

When training on massive multimodal sample sets, LUISUAN High Performance Storage uses NVIDIA GPUDirect Storage to speed up data loading, shortening training runs that originally took months to about a week.

●Ensure the continuous and stable operation of AI training

Training and fine-tuning models with trillions of parameters requires constant interaction between the storage and compute systems, so a storage fault can interrupt training. With a fully redundant hardware architecture, multipath I/O, and a redundant storage network design, LUISUAN High Performance Storage improves reliability by 90%, reducing training interruptions caused by storage failures.
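Why redundancy helps can be seen from a textbook availability model: components in series must all work, while redundant components in parallel fail only if every one of them fails. The sketch below applies that model to a storage access path. It assumes independent failures and uses hypothetical per-component availabilities, so it illustrates the principle rather than reproducing how the 90% figure was derived.

```python
from math import prod


def series(*availabilities: float) -> float:
    """All components must be up (e.g. NIC, cable and switch on one path)."""
    return prod(availabilities)


def parallel(availability: float, copies: int) -> float:
    """At least one of `copies` independent, identical paths must be up."""
    return 1 - (1 - availability) ** copies


if __name__ == "__main__":
    # Hypothetical availabilities for the parts of one storage access path
    path = series(0.999, 0.9995, 0.999)  # NIC, cable, switch
    print(f"single path: {path:.6f}")
    print(f"two redundant paths (multipath): {parallel(path, 2):.6f}")
```

Because unavailability is squared when a second independent path is added, even modest per-path availability improves sharply; correlated failures (a shared power feed, for example) weaken this effect in practice.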

●Simplify AI deployment

The LUISUAN high-performance storage system is deeply optimized for AI training and inference and integrates closely with these workloads. It supports hybrid-cloud deployment (on-premises plus cloud), so AI infrastructure environments can be built quickly.

Related products

LUISUAN LinePillar FS Parallel File System

●Reliable and efficient

Metadata nodes support an Active-Active pairing mode in which paired metadata nodes back up each other's metadata, keeping the metadata service available if one node fails. Global erasure coding is supported, with storage space utilization of over 90%.
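Erasure-code utilization is the fraction of raw capacity that holds user data: with k data fragments and m parity fragments per stripe it is k / (k + m), so a wide stripe such as 20+2 exceeds 90%, versus about 33% for three-way replication. The sketch below computes that ratio and, to show the recovery principle, uses the simplest erasure code (single XOR parity, m = 1). LinePillar FS's actual coding scheme and stripe widths are not stated here, so the 20+2 layout is only an example.

```python
from __future__ import annotations

from functools import reduce


def ec_utilization(k: int, m: int) -> float:
    """Usable fraction of raw capacity for a k data + m parity stripe."""
    return k / (k + m)


def xor_parity(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks (single-parity erasure code)."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("blocks must be non-empty and of equal length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover one lost data block: XOR of the surviving blocks and the parity."""
    return xor_parity(surviving + [parity])


if __name__ == "__main__":
    print(f"20+2 stripe: {ec_utilization(20, 2):.1%}")  # 90.9%
    print(f"3x replication: {1 / 3:.1%}")               # 33.3%
    data = [b"abcd", b"efgh", b"ijkl"]
    parity = xor_parity(data)
    assert rebuild_missing([data[0], data[2]], parity) == data[1]
```

Production systems typically use Reed-Solomon-style codes so that m can exceed 1 and a stripe survives multiple simultaneous failures; the utilization formula is the same.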

●Optimized for massive numbers of small files

Supports unified storage and efficient retrieval of tens of billions of files. Its small-file Container storage technology makes creating and retrieving small files more than 10 times more efficient than traditional storage.
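A common way to speed up small-file workloads is to pack many small files into one large container object and keep a name-to-(offset, length) index, so creating or reading a file costs one append or one ranged read instead of separate per-file allocation and metadata work. The sketch below illustrates that general technique in memory. It is a hypothetical model, not LinePillar FS's Container format, which the text does not describe.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SmallFileContainer:
    """Hypothetical container: many small files appended into one blob plus an index."""
    blob: bytearray = field(default_factory=bytearray)
    index: dict[str, tuple[int, int]] = field(default_factory=dict)  # name -> (offset, length)

    def put(self, name: str, data: bytes) -> None:
        # Append-only: rewriting a name leaves the old bytes in place as garbage
        # that a real system would reclaim by compaction.
        offset = len(self.blob)
        self.blob.extend(data)
        self.index[name] = (offset, len(data))

    def get(self, name: str) -> bytes:
        offset, length = self.index[name]
        return bytes(self.blob[offset:offset + length])


if __name__ == "__main__":
    c = SmallFileContainer()
    for i in range(3):
        c.put(f"sample_{i:04d}.txt", f"label={i}".encode())
    print(c.get("sample_0001.txt"))  # b'label=1'
    print(len(c.index), "files in", len(c.blob), "bytes")
```

The append-only design keeps writes sequential, which suits flash and large-block I/O; the cost is that deletes and overwrites need periodic compaction.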

●Rich access interfaces

Provides a comprehensive set of storage access protocols: file (POSIX, NFS, CIFS), block (iSCSI), object (S3, Swift), big data (HDFS), and the Container Storage Interface (CSI).

ForinnBase GroundPool 5000 EBOF

ForinnBase GroundPool 5000 EBOF (Ethernet Bunch of Flash; GP 5000 for short) integrates current high-speed flash transfer protocols and uses a dedicated storage ASIC to offload and encapsulate data protocols. It offers low latency, low power consumption, high throughput, large capacity, and easy expansion.